SMOTE Algorithm

This short blog post relates to addressing a problem of imbalanced datasets. An imbalanced dataset is a dataset where the classes are not approximately equally represented. These are common in the areas of medical diagnosis, fraud detection, credit risk modeling, etc. For example, in building scorecards, it is not uncommon to see that the number of defaulted customers is dominated by the number of non-default cases (this actually means that loan originators do their job well and reject the majority of bad deals at the outset). Why should we care if our dataset contains imbalanced data? There are a number of common issues:

- The model may prioritize classifying the majority class accurately and accuracy, as a measure of model performance, may no longer be used. For example, suppose that the number of default cases represents 2% of the total number of observations. In this case, a simple model that always predicts the majority class, i.e. a non-default event, is going to achieve 98% accuracy. However, the value of such a model in decision making is questionable at best.

- In real-world domains, imbalanced datasets usually arise as a consequence of building decision systems trying to distinguish between common occurrences and rare but costly alternatives. As an example, consider the problem of accurately diagnosing cancer. The rate of new incidents of cancer is around 0.44%, according to National Cancer Institute. However, the costs associated with making a Type I error (classifying a healthy patient as having cancer) as compared to the costs of committing a Type II error, i.e. failing to diagnose cancer in people with the disease, might vary significantly. The latter is likely to be much higher as it may lead to a loss of precious time in starting a chemotherapy and may lead to death.

Over the years, scientific community has devised a number of ways to address the problem. Roughly, these can be split into algorithm-driven and data-driven solutions. Algorithm-driven solutions work by altering the way algorithms, which are used to build predictive systems, work. For example, a data scientist may change the costs associated with committing Type I and Type II errors in an attempt to make an algorithm aware of the possible trade-offs. Alternatively, boosting algorithms may be used.

On the other hand, data-driven methods work by artificially increasing (decreasing) observations belonging to the minority (majority) class. In particular, we are going to demonstrate the Synthetic Minority Over-sampling Technique (SMOTE) as was proposed by Chawla et al. (2002). The algorithm works by oversampling the minority class by generating synthetic observations along the line segments connecting the nearest minority class neighbors in the feature space.

To demonstrate how the technique can be implemented in Python, we would require the following libraries.

import pandas as pd

import numpy as np

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import f1_score

from sklearn.metrics import confusion_matrix

from imblearn.over_sampling import SMOTE

First things first, we need to get an imbalanced dataset. To do that, we are going to make use of make_classification functionality of scikit-learn library and generate a dataset consisting of 1,000 observations belonging to 2 classes. The majority class, class 0, will represent 98% of all observations. For the sake of simplicity, only 2 informative independent variables are used. This will also make visualizations possible.

X, y = make_classification(n_samples=1000, n_features=2, n_informative=2, n_redundant=0, n_repeated=0, weights=[0.98], class_sep=0.7, random_state=4)

x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=7, stratify=y)

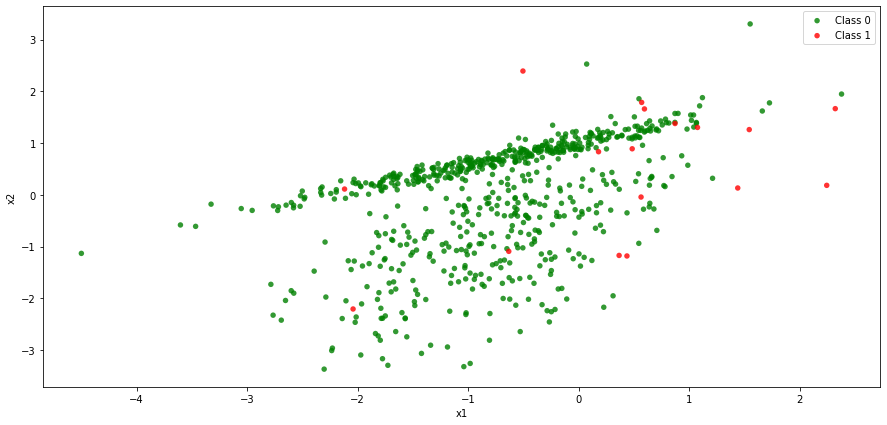

Let us create a simple scatter plot and see how the two classes are distributed in the feature space.

g1 = x_train[y_train==0]

g2 = x_train[y_train==1]

data = (g1, g2)

colors = ('green', 'red')

groups = ('Class 0', 'Class 1')

fig = plt.figure(figsize=[15, 7])

ax = fig.add_subplot(1, 1, 1)

for datum, color, group in zip(data, colors, groups):

ax.scatter(datum.T[0], datum.T[1], alpha=0.8, c=color, edgecolors='none', s=30, label=group)

ax.set_xlabel('x1')

ax.set_ylabel('x2')

plt.legend(loc='best')

The scatter plot clearly shows that class 0 observations dominate over class 1 observations and it may be hard for us to devise a rule that would find an accurate bound separating the two classes.

Let us now fit the logistic regression model and assess its performance on the test set.

model1 = LogisticRegression()

model1.fit(x_train, y_train)

model1.score(x_train, y_train)

0.9771428571428571

The obtained accuracy on the training set is almost 98% and it may seem that the algorithm does a good job at distinguishing between the two classes. Can we expect the same accuracy on the test set?

model1.score(x_test, y_test)

0.9766666666666667

The accuracy on the test is the same as on the training set indicating that overfitting is an unlikely issue in our case. However, as we mentioned in the introduction, accuracy may not be the right performance metric to use. For that reason, we are going to utilize the F1 score metric. For those who do not remember, F1 score is the harmonic mean of precision and recall:

\[ F1 \hspace{1mm} score = 2 \times \frac{precision \times recall}{precision + recall}, \]

where

\[ precision = \frac{True \hspace{1mm} positive}{True \hspace{1mm} positive + False \hspace{1mm} positive} \]

and

\[ recall = \frac{True \hspace{1mm} positive}{True \hspace{1mm} positive + False \hspace{1mm} negative}. \]

Let us now see what value of F1 score the fitted logistic regression achieves on the test set.

f1_score(model1.predict(x_test), y_test)

0.0

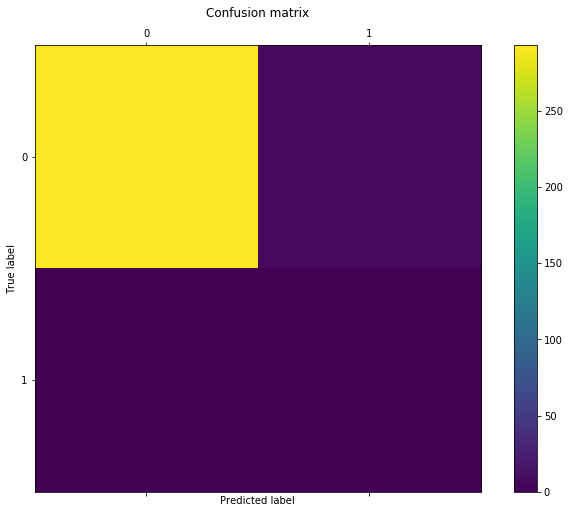

The obtained value of 0 means that both precision and recall are equal to 0 or, in other words, the are no true positives, and the algorithm failed to correctly identify a case belonging to class 1. The next step is to investigate the associated confusion matrix.

conf = confusion_matrix(model1.predict(x_test), y_test)

conf

[293, 7],

[0, 0]

fig1 = plt.figure(figsize=[12, 8])

plt.matshow(conf, fignum=1)

plt.title('Confusion matrix')

plt.colorbar()

plt.ylabel('True label')

plt.xlabel('Predicted label')

plt.show()

As we may infer from the above heat map, the logistic regression predicted that all 300 cases in the test set belong to class 0. This rule resulted in the algorithm being correct 293 times out of 300 and thence the correspondingly high accuracy of almost 98%. However, if we think about the problem in terms of cancer diagnosis, using this algorithm would mean that 7 patients would go away with the disease undetected, which is a horrible performance that would lead to very costly consequences both for the hospital and the patients.

The poor performance of the algorithm is the direct consequence of the imbalanced nature of the dataset. Instead, we are now going to use the SMOTE algorithm to synthetically increase the proportion of the minority class cases and make it equal to 50%.

smote = SMOTE(sampling_strategy=1, k_neighbors=10, random_state=4)

x_resampled, y_resampled = smote.fit_resample(x_train, y_train)

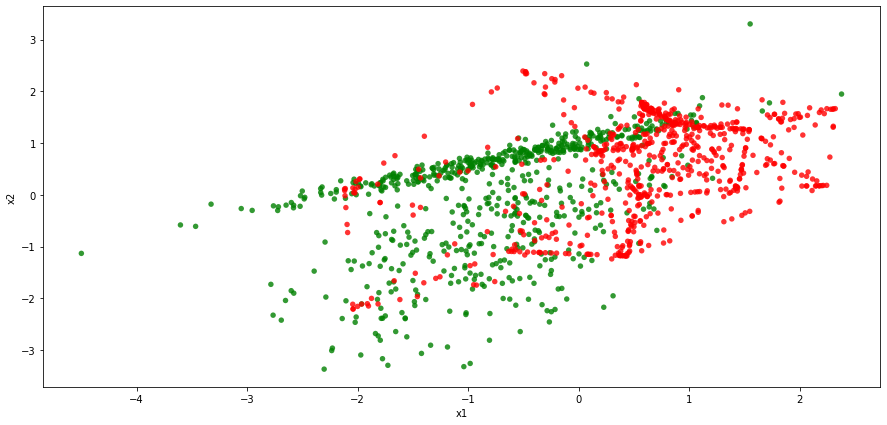

To generate synthetic observations from the minority class, we looked at the 10 nearest neighbors of a given minority class case in the initial dataset. The figure below demonstrates how the classes are distributed in the feature space following the treatment.

fig2 = plt.figure(figsize=[15, 7])

ax2 = fig2.add_subplot(1, 1, 1)

ax2.set_xlabel('x1')

ax2.set_ylabel('x2')

g1 = x_resampled[y_resampled==0]

g2 = x_resampled[y_resampled==1]

data = (g1, g2)

for datum, color, group in zip(data, colors, groups):

ax2.scatter(datum.T[0], datum.T[1], alpha=0.8, c=color, edgecolors='none', s=30, label=group)

plt.show()

While there is still a certain degree of overlap between the two classes in the augmented dataset, it looks that the separating rule may be easier to devise now. As such, observations belonging to class 1 tend to be associated with higher values if x1 and higher values of x2.

To check if the updated dataset is better suited for training a predictive model, we are going to refit a logistic regression model and asses it performance.

model1.fit(x_resampled, y_resampled)

model1.score(x_resampled, y_resampled)

0.8023426061493412

The resulting accuracy on the training set is now 80% down from almost 98% in the original version of the dataset.

model1.score(x_test, y_test)

0.74

The obtained accuracy on the test set is 74%. Note that we did not alter the test set to facilitate comparison.

f1_score(model1.predict(x_test), y_test)

0.13333333333333333

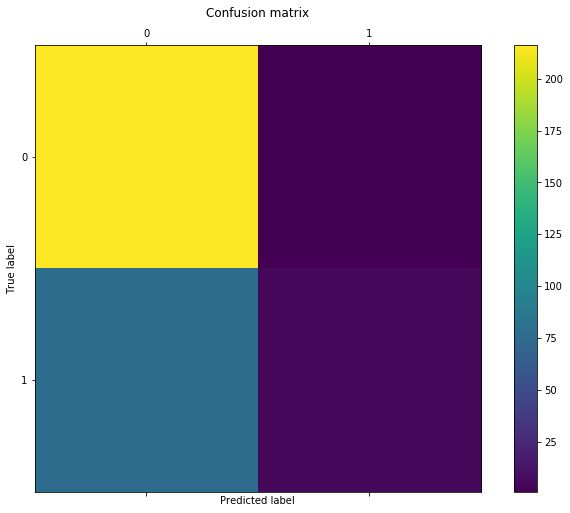

The value of F1 metric is now 0.13 meaning that the model now manages to correctly identify classes belonging to class 1. To see what exactly the fitted model predicts, we are going to have a look at the associated confusion matrix.

conf = confusion_matrix(model1.predict(x_test), y_test)

conf

[216, 1],

[77, 6]

fig1 = plt.figure(figsize=[12, 8])

plt.matshow(conf, fignum=1)

plt.title('Confusion matrix')

plt.colorbar()

plt.ylabel('True label')

plt.xlabel('Predicted label')

plt.show()

We now observe that the fitted model spends more effort in trying to identify observations belonging to class 1. As such, it predicted that 83 (77 + 6) out of 300 observations belong to class 1. Furthermore, only a single class 1 observation has been incorrectly labeled as belonging to class 0. However, one should also note that this increase in the true positive rate comes at a cost of higher false positive rate, i.e. higher number of observations belonging to class 0 classified as belonging to class 1.

Summing up, we can see that the SMOTE oversampling algorithm allows us to shift the weight from the majority class and make the algorithm more aware of the minority class. Such treatment resulted in a higher F1 score metric at a cost of a reduction in accuracy.

References

Chawla, N.V., Bowyer, K.V., Hall, L.O. and Kegelmeyer, W. P. SMOTE: Synthetic minority oversampling technique. Journal of Artificial Intelligence Research, 2002, 16, pp.321-357

Kotsiantis, S., Pintelas, P.E., Kanellopoulos, D. Handling imbalanced datasets: A review. GESTS International Transactions on Computer Science and Engineering, 2006, 30.